NYC Computer Vision Traffic Map

Services like Google Maps typically rely on a combination of user GPS data and data from physical sensors. This project offers an alternative method for viewing traffic trends in NYC through computer vision.

The interactive map is shown below. Clicking on an area shows the greatest car count found, based on a trained car detection model. To view a specific camera, click directly on an outlined circle. You can toggle the circle heatmap off or switch to a less detailed map by hovering near the top right.

The map is currently set to update hourly.

Many thanks to the NYCDOT Traffic Management Center for providing access to these traffic cameras.

To build this, I used a Haar Cascade Classifier with OpenCV. The interface is built using Folium, with AWS S3 acting as the backend. All of the traffic camera data is provided by the NYCDOT Traffic Management Center.
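As a rough illustration of the detection step, here is a minimal sketch of counting cars in a single camera frame with an OpenCV Haar cascade. The file paths, helper names, and the `scale_factor` and `min_neighbors` values are assumptions for illustration, not the settings used in this project.

```python
def load_cascade(cascade_path):
    """Load a trained Haar cascade XML file (requires opencv-python)."""
    import cv2  # imported lazily so count_cars has no hard dependency

    cascade = cv2.CascadeClassifier(cascade_path)
    if cascade.empty():
        raise ValueError(f"could not load cascade from {cascade_path}")
    return cascade


def load_gray_frame(image_path):
    """Read a camera snapshot from disk and convert it to grayscale."""
    import cv2

    frame = cv2.imread(image_path)
    if frame is None:
        raise ValueError(f"could not read image {image_path}")
    return cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)


def count_cars(gray_frame, cascade, scale_factor=1.1, min_neighbors=3):
    """Return the number of bounding boxes the cascade reports for a frame.

    scale_factor and min_neighbors are illustrative defaults; raising
    min_neighbors usually trades missed cars for fewer false positives.
    """
    boxes = cascade.detectMultiScale(
        gray_frame, scaleFactor=scale_factor, minNeighbors=min_neighbors
    )
    return len(boxes)
```

With a trained cascade at a hypothetical path such as `cars.xml`, a count for one snapshot would be `count_cars(load_gray_frame("camera.jpg"), load_cascade("cars.xml"))`.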

The heatmap provides a decent idea of the overall trend of traffic, but it does have a few pitfalls.

Unfortunately, the map isn’t very accurate at night because of glare and a lack of nighttime data in the vehicle detection model. In addition, the model is trained for highway environments, so false positives from cameras in Manhattan are frequent. The tendency of Haar Cascade models to report false positives doesn’t help either; the model frequently mistakes crosswalks for a group of cars. On the bright side, the accuracy for highways, notably in Queens, Brooklyn, and Staten Island, is generally great.

As of now, the traffic level is based strictly on the number of cars found, but I plan to convert it to a relative traffic level based on each camera’s detection history. This would help weed out cameras that are obstructed and reduce the effect of consistent false positives.
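One possible way to compute such a relative level is sketched below. The median baseline, the min-to-max spread, and the function name are assumptions for illustration; the planned approach may look different.

```python
from statistics import median


def relative_traffic_level(current_count, history):
    """Score the current count against one camera's own past counts.

    Assumed scheme: subtract the camera's median count (its baseline) and
    divide by the spread of its history. A camera that always reports the
    same number, such as an obstructed one or one with a constant false
    positive, has zero spread and is scored as 0.0.
    """
    if not history:
        return 0.0
    baseline = median(history)
    spread = max(history) - min(history)
    if spread == 0:
        return 0.0
    return (current_count - baseline) / spread


# A camera that normally sees 2 to 10 cars and now sees 12
busy = relative_traffic_level(12, [2, 4, 6, 8, 10])

# A camera stuck reporting the same 5 detections every hour
stuck = relative_traffic_level(5, [5, 5, 5, 5])
```

Here `busy` is well above the camera's baseline, while `stuck` stays at zero because its history carries no information about changes in traffic.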

If you want to see the code or generate the map locally, visit the GitHub repo.

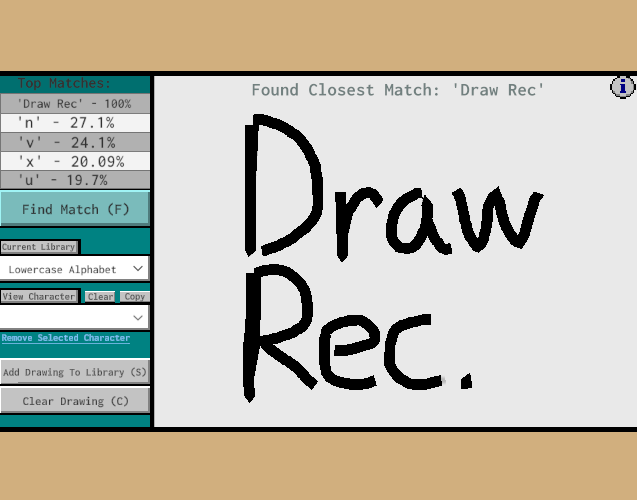

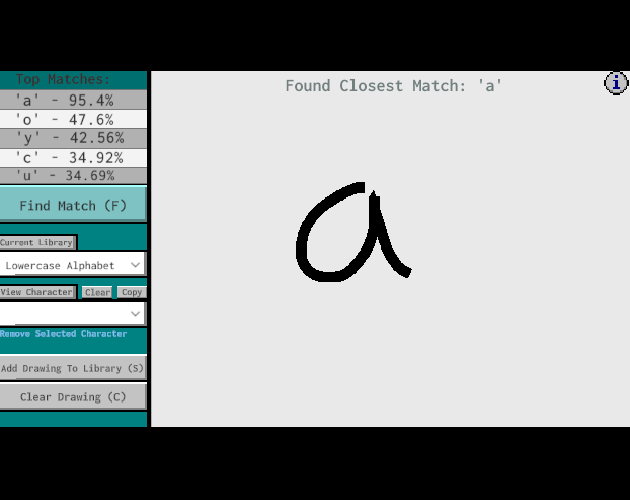

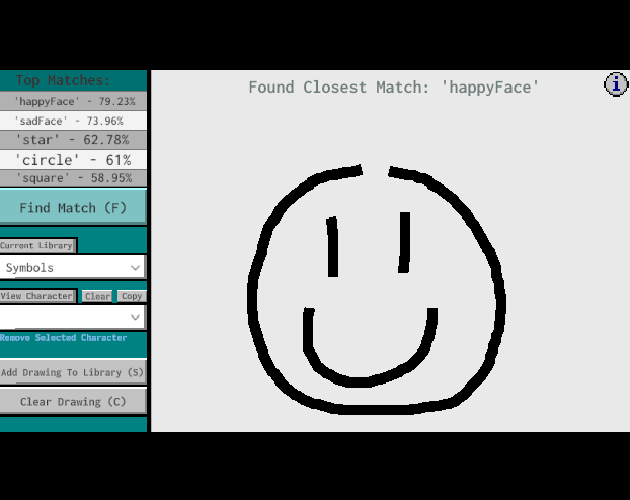

Unity Drawing Recognition

Unity Drawing Recognition is an algorithm and tool developed to enable character and drawing recognition in Unity projects. It can be adapted to support features such as gesture-based mouse controls, handwriting-to-text, and general discernment of a user’s mouse patterns.

The algorithm itself can also be used as a general approach to character and drawing recognition outside of Unity.

The recognition system operates by comparing an input drawing against stored libraries of characters. To define and store a character, only a single drawn example is required. This makes it fast and convenient to create libraries of custom characters and to incorporate in-game mechanics that allow users to modify or add to these libraries at runtime.

To calculate a comparison score, drawn characters are converted to four different map representations that reflect the concentration of a character’s drawn points. During a comparison, the two characters’ maps are compared directly and a similarity score is calculated.
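To make the idea of a concentration map concrete, here is a minimal Python sketch that uses a single grid-based density map and an L1-based similarity score. The grid size, normalization, and scoring formula are assumptions for illustration; the actual tool uses four map representations whose details are in the repo.

```python
def density_map(points, grid_size=8):
    """Convert drawn (x, y) points into a grid of point concentrations.

    Points are rescaled to their bounding box first, so the map does not
    depend on where or how large the character was drawn. Each cell holds
    the fraction of points that landed in it.
    """
    if not points:
        raise ValueError("a drawing needs at least one point")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    width = (max(xs) - min_x) or 1.0   # avoid dividing by zero for flat strokes
    height = (max(ys) - min_y) or 1.0
    grid = [[0.0] * grid_size for _ in range(grid_size)]
    for x, y in points:
        col = min(int((x - min_x) / width * grid_size), grid_size - 1)
        row = min(int((y - min_y) / height * grid_size), grid_size - 1)
        grid[row][col] += 1.0 / len(points)
    return grid


def similarity(map_a, map_b):
    """Return 1.0 for identical maps and 0.0 for maps with no shared cells."""
    total_diff = sum(
        abs(a - b)
        for row_a, row_b in zip(map_a, map_b)
        for a, b in zip(row_a, row_b)
    )
    return 1.0 - total_diff / 2.0


diagonal = [(0, 0), (1, 1), (2, 2), (3, 3)]
bigger_diagonal = [(10, 10), (12, 12), (14, 14), (16, 16)]
horizontal = [(0, 0), (1, 0), (2, 0), (3, 0)]

same_shape_score = similarity(density_map(diagonal), density_map(bigger_diagonal))
different_shape_score = similarity(density_map(diagonal), density_map(horizontal))
```

The two diagonals score as a match despite their different size and position, while the diagonal and the horizontal stroke score much lower.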

This is a general outline of the process; for a more comprehensive explanation of the workings and detailed documentation, check out the GitHub Repo!

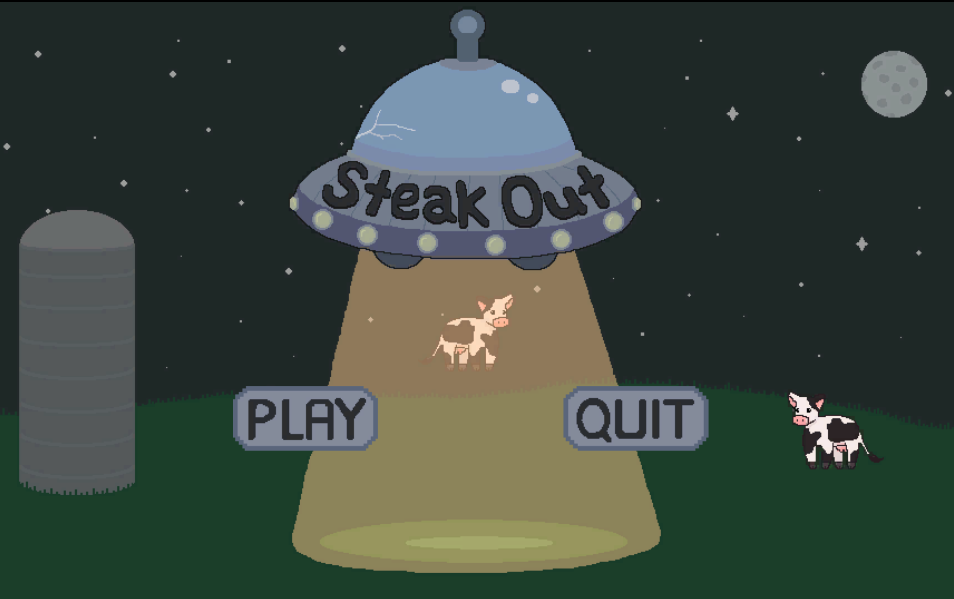

Steak Out

Gameplay Music + Sound Design

Steak Out is a Unity-based stealth platformer about the journey of a brave cow that’s been abducted by a UFO. To escape, you’ll need to expertly maneuver around aliens and lasers while using the environment to your advantage.

Audio Assets

Sound Effects

Footsteps

Alien Footsteps

Cow Footsteps

These are compilations of footstep variations for each character. During gameplay, a Unity script I implemented plays a random variation while the character is walking.

The footsteps are foley recordings with a little post-processing. The Alien’s was created by hitting a boot against a metal sheet, and the Cow’s was created by tapping a hard stick against the same metal sheet!

Miscellaneous

Checkpoint

Button Press

Teleport

Laser Hit

Laser Ambience

Aside from the button press, these sounds were created with Ableton’s Operator synthesizer. I processed most of the effects with the reverb from the in-game soundtrack to make them fit better into the game’s environment.

Main Soundtrack & Ambience

Main Soundtrack

Ship Ambience

Once the game begins, these tracks play on loop in unison. I left room in the soundtrack’s low-mid frequencies to accommodate the ship ambience, and to allow for any possible changes in the game that might switch up the ambience.

The instrumentation of the main soundtrack is inspired by classic spy films like Mission: Impossible and James Bond. To fit the otherworldly alien atmosphere, I experimented with adding effects to symphonic instruments from the BBC Symphony Orchestra VST, ending up with this return track that nearly every instrument is routed to:

This is a reverb that plays an octave up with a slight delay, adding a ton of character to the horns and supporting instruments. I used Little Plate and Little AlterBoy from Soundtoys for this. In combination, they’re great for creating washed-out, distorted effects, and for general reverb and pitch-shifting purposes.